The ability to extrapolate, or generalize beyond the data the neural network was trained on, is a critical characteristic of deep learning.

Neural networks are a set of algorithms that recognize patterns in data, such as images, text, audio or time series. They interpret these raw inputs by converting them into numerical vectors, which can then be labeled or clustered.

Graph Neural Networks

The ability of neural networks to extrapolate to new data makes deep learning a powerful tool for a wide range of applications, from image recognition to natural language processing to scientific discovery.

For example, graph neural networks have been applied in computer vision to help recognize objects in images and videos. They’re also useful for NLP, where they help machines classify text.

Similarly, graph neural networks are used in chemistry to analyze the structure of molecules and compounds, helping scientists predict bond lengths, charges, and new compounds. Because a molecule is naturally described as a graph of atoms and bonds, graph neural networks are well suited to handling these complex structures.

Graphs are non-Euclidean structures made up of vertices (nodes) and edges that connect pairs of nodes. Unlike an image grid or a fixed-length sequence, a graph has no fixed size or node ordering. Graphs provide a rich set of information about the relationships between nodes, which can be difficult to process with traditional models.
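
To make the idea of nodes and edges concrete, here is a minimal sketch of one common way to store a graph in code: an edge list converted into an adjacency list. The node names and edges are made-up example data, and `build_adjacency` is a hypothetical helper rather than part of any library.

```python
# A small undirected graph stored as an edge list (made-up example data).
edges = [("A", "B"), ("A", "C"), ("B", "C"), ("C", "D")]

def build_adjacency(edge_list):
    """Return a dict mapping each node to the sorted list of its neighbors."""
    adjacency = {}
    for u, v in edge_list:
        # Undirected graph: record the edge in both directions.
        adjacency.setdefault(u, set()).add(v)
        adjacency.setdefault(v, set()).add(u)
    return {node: sorted(neighbors) for node, neighbors in adjacency.items()}

adjacency = build_adjacency(edges)

# The degree of a node is the number of neighbors it has.
degrees = {node: len(neighbors) for node, neighbors in adjacency.items()}

print(adjacency)
print(degrees)  # {'A': 2, 'B': 2, 'C': 3, 'D': 1}
```

Notice that nodes have different numbers of neighbors. That irregularity is one reason models built for fixed-size inputs are hard to apply to graphs directly.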

Feedforward Neural Networks

Neural networks extrapolate by using the patterns and relationships learned from the training data to make predictions about new, unseen data. Those patterns are acquired during training, which is typically driven by the backpropagation algorithm.

Feedforward neural networks are one of the most basic types of artificial neural network architectures. These networks have input and output layers as well as a number of hidden layers between them.

Each layer is connected to the previous layer by a set of weights. Together, these weights encode what the network has learned.

These connections are not all equal, and each connection may have a different strength or weight.

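When a feedforward network makes a prediction, each layer computes its outputs from the previous layer’s outputs and the weights and biases that connect them, then passes the result forward to the next layer. During training, backpropagation works out how much each weight and bias contributed to the error, and the network updates them accordingly.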
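
As a rough illustration of the forward pass, the sketch below pushes one input through a tiny network with two inputs, two hidden units and one output. The weights, biases and the choice of `tanh` as the activation are arbitrary values picked for the example, and `dense` and `forward` are hypothetical helper names.

```python
import math

def dense(inputs, weights, biases):
    """Weighted sum for one layer; weights[j][i] connects input i to unit j."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]

def forward(x, params):
    """Forward pass: input -> hidden layer (tanh) -> linear output layer."""
    hidden = [math.tanh(z) for z in dense(x, params["W1"], params["b1"])]
    return dense(hidden, params["W2"], params["b2"])

# Arbitrary example parameters (assumed values, not trained).
params = {
    "W1": [[0.5, -0.3], [0.8, 0.2]],  # 2 hidden units, 2 inputs each
    "b1": [0.0, 0.1],
    "W2": [[1.0, -1.0]],              # 1 output unit, 2 hidden inputs
    "b2": [0.0],
}

output = forward([1.0, 2.0], params)
print(output)
```

Each connection has its own weight, so changing a single entry in `W1` or `W2` changes how strongly that one connection influences the output.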

Learning

Graphs are a natural way to model data because they describe the relationships (edges) between objects (nodes). Edges can also be directed, which helps capture the flow of information or traffic.

In neural networks, learning occurs by minimizing the difference between the network’s predicted output and the expected output. During the learning phase, input patterns are presented to the network and passed through the hidden units, layer by layer, until they reach the output layer. The weights in each layer are then adjusted to reduce the error.

The hidden units also pass the input-weight product through their activation function, which determines how strongly a signal propagates through the network and affects its final output. Training on examples with known answers can be thought of as “learning with a teacher” (supervised learning). It is computationally costly, but it’s a necessary step that is typically done up front, before the network is used to make predictions.
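
The idea of learning by reducing the gap between predicted and expected outputs can be sketched with the simplest possible model: a single linear unit trained by gradient descent. The data, learning rate and number of steps below are assumptions chosen for illustration. A real network has many more weights and uses backpropagation to compute the gradients, but the update rule has the same shape.

```python
# Made-up training data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

def mse(w, b):
    """Mean squared error between predictions w*x + b and the targets."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

w, b = 0.0, 0.0          # start from an uninformed guess
learning_rate = 0.05     # assumed step size
losses = []

for step in range(2000):
    # Prediction error for every training example.
    errors = [w * x + b - y for x, y in zip(xs, ys)]
    # Gradients of the mean squared error with respect to w and b.
    grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / len(xs)
    grad_b = 2 * sum(errors) / len(xs)
    # Move the parameters a small step against the gradient.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b
    losses.append(mse(w, b))

print(f"w = {w:.3f}, b = {b:.3f}")
print(f"first loss = {losses[0]:.4f}, last loss = {losses[-1]:.2e}")
```

With these settings the parameters should settle close to `w = 2` and `b = 1`, the values used to generate the data.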

A graph neural network (GNN) is a type of deep learning model that operates naturally on data structured as graphs. GNNs have become popular in areas like social network analysis and computational chemistry, especially for drug discovery. Despite being relatively new, GNNs have a promising future.

Extrapolation

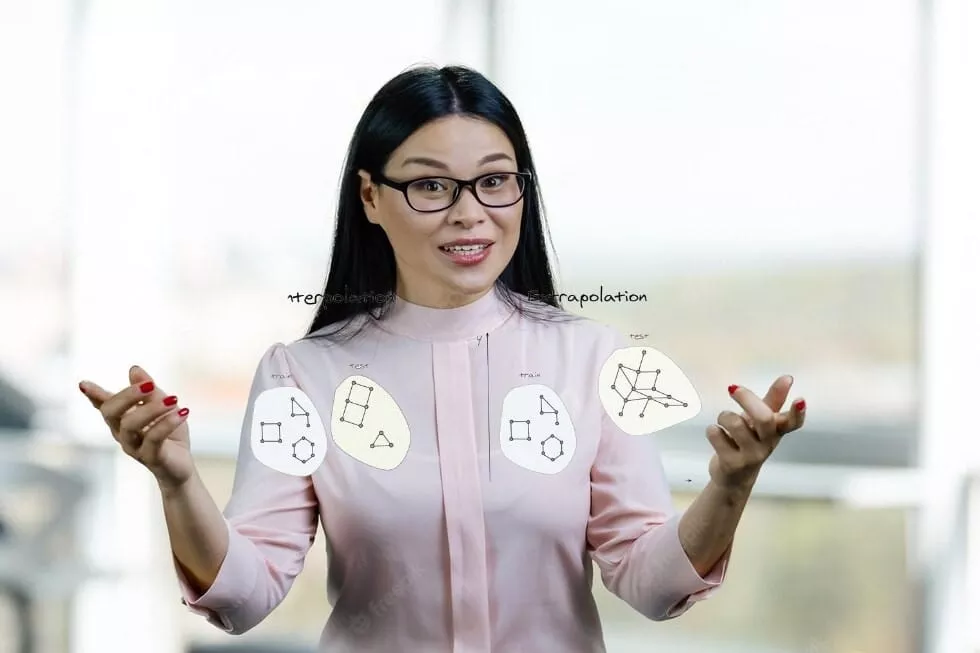

Extrapolation is the ability of a neural network to make predictions about new, unseen data that falls outside of the range of training data. This is different from interpolation, which involves predicting values that fall within the range of the training data.

More generally, in machine learning, extrapolation means estimating a variable’s value beyond the training data range based on its relationship with another variable. It’s similar to interpolation, but it’s more uncertain and can produce meaningless results.
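
The difference in reliability is easy to see with a toy experiment. The sketch below uses a least-squares straight line as a stand-in for a trained model (it is not a neural network). It fits the line to samples of `y = x²` taken only from the range 0 to 1, then compares the error at a point inside that range with the error at a point far outside it. The target function, sample points and test points are all assumptions chosen for the illustration.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    return slope, mean_y - slope * mean_x

def target(x):
    """The 'true' relationship the model is trying to capture."""
    return x ** 2

# Training data covers only the range 0.0 to 1.0.
train_xs = [i / 10 for i in range(11)]
train_ys = [target(x) for x in train_xs]
slope, intercept = fit_line(train_xs, train_ys)

def predict(x):
    return slope * x + intercept

error_inside = abs(predict(0.5) - target(0.5))   # interpolation
error_outside = abs(predict(3.0) - target(3.0))  # extrapolation

print(f"inside the training range:  error = {error_inside:.3f}")
print(f"outside the training range: error = {error_outside:.3f}")
```

The error inside the training range is about 0.1, while the error at `x = 3` is about 6. The model has no evidence about how the relationship behaves out there, so it simply continues the trend it saw.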

This ability is critical in many applications, from image recognition to natural language processing to scientific discovery. For graph neural networks, it depends on learning representations that capture the structural properties of graphs, which allows them to generalize to new graphs with similar structures and make predictions or classifications on new, unseen data.

One approach to achieving extrapolation in GNNs is to use a hierarchical message-passing scheme where nodes communicate with their neighbors to update their representations. Because the same learned update rule is applied at every node, the model can compute representations for new, unseen graphs without changing its weights.
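
Here is a minimal sketch of what a single round of message passing can look like. It is deliberately simplified: each node just averages its own feature vector with those of its neighbors, and there are no learned weights or activation functions, which a real GNN layer would normally include. The graph, the feature values and the `message_passing_step` helper are all hypothetical.

```python
def message_passing_step(features, adjacency):
    """One round: each node averages its own features with its neighbors'."""
    updated = {}
    for node, neighbors in adjacency.items():
        # Messages are the node's own vector plus one vector per neighbor.
        group = [features[node]] + [features[n] for n in neighbors]
        dim = len(features[node])
        updated[node] = [
            sum(vec[i] for vec in group) / len(group) for i in range(dim)
        ]
    return updated

# Made-up graph (adjacency list) and 2-dimensional node features.
adjacency = {"A": ["B", "C"], "B": ["A"], "C": ["A", "D"], "D": ["C"]}
features = {
    "A": [1.0, 0.0],
    "B": [0.0, 1.0],
    "C": [1.0, 1.0],
    "D": [0.0, 0.0],
}

h1 = message_passing_step(features, adjacency)  # 1-hop information
h2 = message_passing_step(h1, adjacency)        # 2-hop information

for node in adjacency:
    print(node, [round(v, 3) for v in h2[node]])
```

After one round a node only knows about its direct neighbors. After two rounds, information from nodes two hops away has reached it as well. The same function works on a graph of any size, which is what lets this kind of model be applied to graphs it has never seen.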